Beyond the Frame: AI Weekly Digest #4

Jan 07, 2026

This week was definitely more chill than the recent madness, but while the volume dropped, the quality stayed high. We are thrilled to share some huge updates, from groundbreaking video generation models to the strategic partnerships shaping the future. Here is the scoop on everything that matters this week.

Luma Ray3 Modify

Luma just dropped a massive update to Dream Machine with Ray3 Modify, marking the end of the "slot machine" era for video-to-video editing. This update introduces precise Start and End Frame controls for the first time, allowing you to guide transitions and lock down spatial continuity across complex camera moves. The model is also built for physically plausible, scene-aware transformations, so your edits, whether rapid retouching or element swapping, preserve the narrative coherence and authentic performance of the original footage instead of looking like a filter pasted on top.

Kling Motion Control release

This week, Kling released Motion Control, a massive update that brings us closer to professional motion capture using nothing but AI. On paper, it promises precise adherence to reference videos for complex actions, finally bridging the gap between real acting and generative video. However, our internal tests reveal that the devil is in the details. While the system captures macro movements — like head turns, posture, and timing — surprisingly well, it struggles significantly when complexity ramps up. We found that micro-expressions and subtle lip-sync details often get smoothed over, fingers are still prone to morphing, and fine atmospheric elements vanish completely. It feels a lot like the early days of Kling 1.0: the potential is undeniable, but there is still a lot of work to be done before this is production-ready for complex scenes.

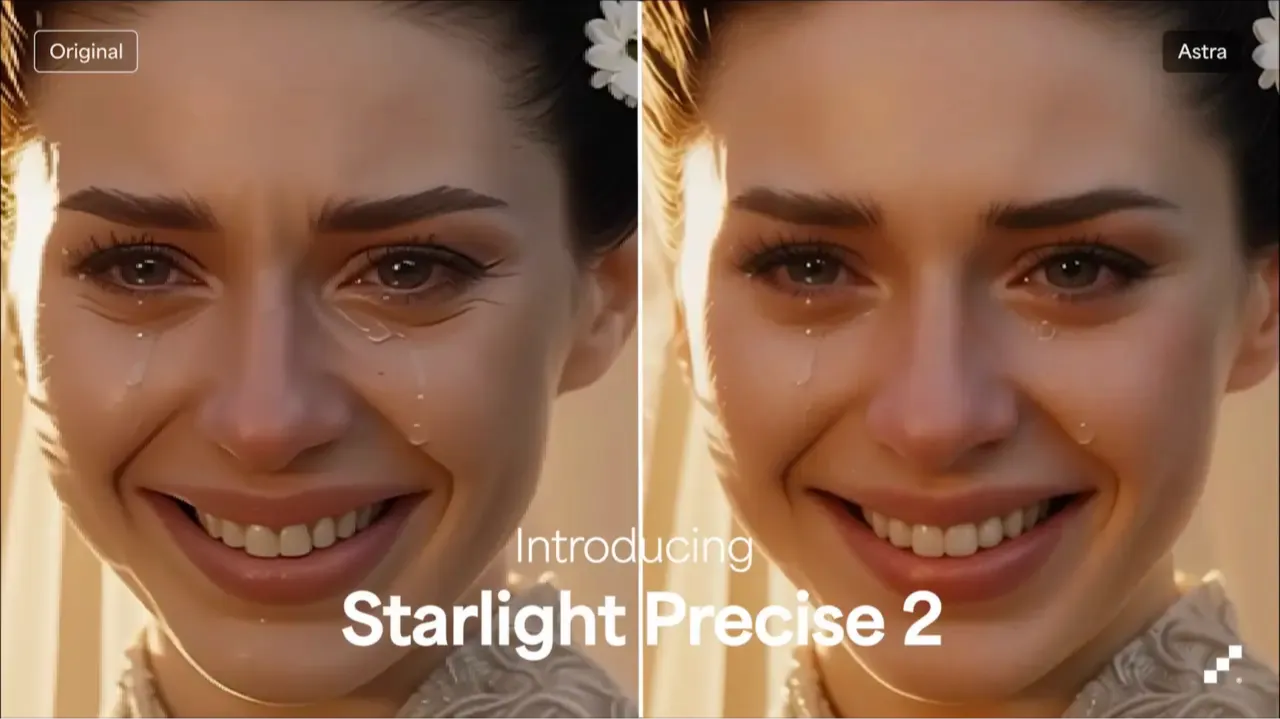

Starlight Precise 2 by Topaz Labs

Topaz Labs released the Starlight Precise 2 model in Astra. Specifically engineered for people and faces, this update eliminates that uncanny "mannequin" sheen, restoring natural skin textures and fine details that other upscalers often smooth away. It excels at preserving organic grain structure even in low-light shots, preventing the overly clean "digital smear" that ruins cinematic immersion. This makes it an essential tool for narrative filmmakers who need to rescue soft footage without compromising the authenticity of the actor's performance. If you want your upscaled characters to look like living, breathing humans rather than video game assets, this model delivers a new level of realism.

Meta's 2026 Roadmap: Mango & Avocado 🥭🥑

Meta is preparing to shake up the industry in the first half of 2026 with two new frontier models code-named Mango and Avocado. Mango is a next-gen multimodal model focused on image and video generation, positioned to compete directly with Google’s Veo, OpenAI’s Sora, and Runway, signaling Meta's serious entry into high-fidelity generative video. Meanwhile, Avocado is their upcoming LLM designed to push the limits of text-based reasoning, aiming to create smarter, more intuitive chatbot experiences that go beyond the current capabilities of the Llama series.

Can Veo 3.1 replace After Effects?

Is AI ready to replace your After Effects templates? We put Veo 3.1 to the test on cinematic title reveals, and the results are shaking up the motion graphics landscape. The model renders physical effects such as light flares, particle dispersion, and metallic sheens with a high-end polish that already rivals professional stock templates. However, the trade-off is control: you can’t tweak ease-in curves or dictate specific particle paths, so fine details are left to the AI's interpretation. While it’s not quite ready for complex custom animation, the workflow is clearly shifting from manual keyframing to curating the right physics, making it a massive asset for quick, high-quality project intros.

Qwen Layered: The End of Flat Images

Qwen just fundamentally changed how AI-generated images can be edited with Qwen-Image-Layered, a model that treats images as fully editable stacks rather than flat pixels. It automatically decomposes scenes into distinct RGBA layers, allowing you to independently move, resize, or recolor objects without affecting the rest of the composition. With support for "recursive decomposition," you can even drill down into specific layers to break them apart further. This effectively turns every generation into a pre-masked asset library, eliminating the need for complex manual masking and giving creators the "inherent editability" that has been missing from AI art tools until now. The result finally bridges the gap between raw AI generation and professional, non-destructive design workflows.
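Qwen's internal layer format isn't described here, so the sketch below is only a generic illustration of why RGBA layers stay editable: a bottom-to-top stack of straight-alpha layers can be flattened with the standard Porter-Duff "over" operator, and moving one layer before flattening leaves every other layer untouched. The helper names (`over`, `flatten`, `shift_right`) and the tiny list-of-pixels image format are hypothetical, chosen for illustration, and are not part of any Qwen API.

```python
from typing import List, Tuple

# Hypothetical image format for illustration: each pixel is (r, g, b, a) in [0, 1],
# with straight (non-premultiplied) alpha; a layer is a list of rows of pixels.
Pixel = Tuple[float, float, float, float]
Layer = List[List[Pixel]]

CLEAR: Pixel = (0.0, 0.0, 0.0, 0.0)


def over(top: Pixel, bottom: Pixel) -> Pixel:
    """Porter-Duff 'over': composite one straight-alpha pixel onto another."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1 - ta)
    if out_a == 0:
        return CLEAR  # both pixels fully transparent

    def mix(tc: float, bc: float) -> float:
        # Weight each colour by its alpha contribution, then un-premultiply.
        return (tc * ta + bc * ba * (1 - ta)) / out_a

    return (mix(tr, br), mix(tg, bg), mix(tb, bb), out_a)


def flatten(layers: List[Layer]) -> Layer:
    """Flatten a bottom-to-top stack of equally sized layers into one image."""
    height, width = len(layers[0]), len(layers[0][0])
    result = [[CLEAR] * width for _ in range(height)]
    for layer in layers:
        for y in range(height):
            for x in range(width):
                result[y][x] = over(layer[y][x], result[y][x])
    return result


def shift_right(layer: Layer, dx: int) -> Layer:
    """Return a copy of a layer moved dx pixels right, filling the gap with transparency."""
    return [
        [row[x - dx] if 0 <= x - dx < len(row) else CLEAR for x in range(len(row))]
        for row in layer
    ]


# Usage: a red object on its own layer over an opaque blue background.
blue = (0.0, 0.0, 1.0, 1.0)
red = (1.0, 0.0, 0.0, 1.0)
background = [[blue, blue, blue]]
foreground = [[red, CLEAR, CLEAR]]
print(flatten([background, foreground]))
print(flatten([background, shift_right(foreground, 1)]))
```

Because each layer keeps its own alpha channel, moving or recoloring one layer and re-flattening is non-destructive; a flat image has no such separation to fall back on, which is why editing it usually means masking first.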

Wan 2.6: Commercial Image Mastery

Wan 2.6 just expanded its arsenal with a dedicated Image model, targeting professional workflows with Commercial-Grade ID Preservation that keeps characters and styles consistent across campaigns. The update introduces Interleaved Text-and-Image generation for logical, layered storytelling, alongside precise controls for camera angles, lighting, and spatial depth. With flexible multi-image referencing, designers can extract specific creative elements, for example taking the composition from one source and the color palette from another, to generate novel, high-fidelity results. This level of "logical reasoning" moves Wan 2.6 beyond random generation and gives brands the control they need to build cohesive visual identities without relying on trial and error.

It is also becoming increasingly clear that the industry is chasing the standard set by Nano Banana Pro: more and more models are adopting the high-fidelity control and consistency features it pioneered, making it the market benchmark everyone else is now trying to reach.

Adobe x Runway: The Power Couple of 2026

Adobe has officially partnered with Runway to bring high-end generative video directly into professional workflows. Runway’s new Gen-4.5 model is now available in the Adobe Firefly app, offering creators advanced control over complex scenes, realistic physics, and expressive character performance. As Runway’s preferred API partner, Adobe will provide exclusive early access to future models, allowing users to generate assets in Firefly and refine them in Premiere Pro and After Effects, bringing AI experimentation and production-ready editing into a single workflow.

Want to go beyond weekly updates?

Our AI Filmmaking Course gives you a complete, practical workflow — from writing and design to directing and post-production. We keep the course updated as the tools evolve, so you always stay ahead.